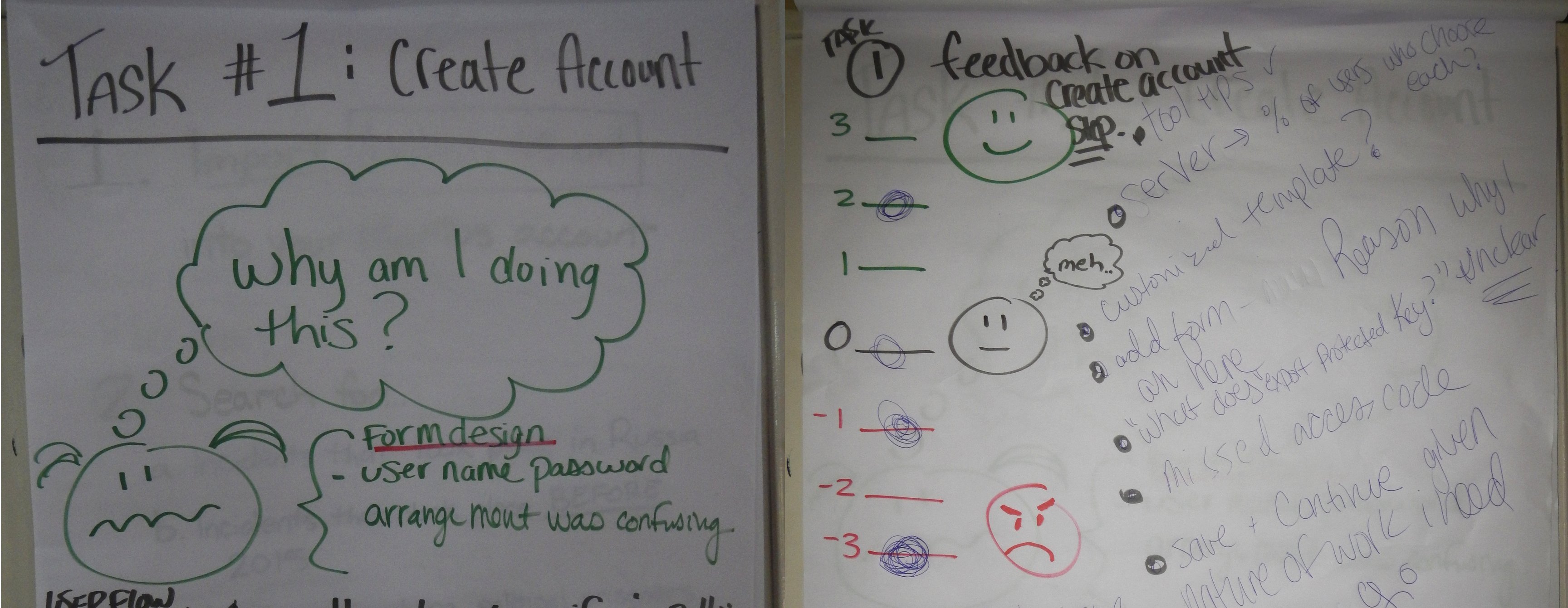

During our first Tool Feedback Training (TFT), we faced some natural reluctance to “complain” about the tool being taught, which was magnified by having a representative from the tool's team in the training. To work around this, we used the high number of facilitators during each hands-on activity to gather observational data. The trainer would set a short goal (create an account, create a record, share a record), and the participants would work in small groups to accomplish it, with a facilitator observing each group to note where confusion or problems occurred. To balance this with maintaining the flow of the training, the facilitators would keep each group from floundering, and the trainer would review the process for everyone before moving on.

In effect, this built user testing into the ADIDS “deepening” stage by encouraging participants to explore the tool in order to achieve these goals. Between activities, the facilitators could dive in and ask more about the problematic parts of the process without embarrassing either the tool developer or individual participants.

We brought this same strategy to the second TFT, where we ran into challenges working across language barriers. This approach requires both a hands-on session with clearly definable “steps” and a grouping structure that works for the participants, the size of the group, and any translation needs.

We approached this slightly differently at each training, and each time it provided valuable insights into tool usability, with specific pain points from users. Some version of this could be worked into the broader digital security training process to create a feedback loop between trainers, representing their participants, and tool developers.

At the UXForum next week, we will be talking about how these different approaches worked across the communities we trained with, and looking for any common approaches to recommend.